History of AI

I. Introduction

The roots of AI can be traced back to ancient civilizations’ mythologies and folklore, where there were tales of automatons and mechanical beings.

It was in the 17th century, however, that the first machines capable of performing mathematical calculations appeared, such as Pascal’s calculator (the Pascaline) and Leibniz’s Stepped Reckoner.

In the 19th century, Charles Babbage designed the Analytical Engine, the first design for a general-purpose programmable computer. It is considered one of the precursors of modern computing and, by extension, of AI. In 1843, Ada Lovelace published an algorithm for the Analytical Engine to compute Bernoulli numbers. It is considered the first published algorithm specifically tailored for implementation on a computer, and for this reason Lovelace is often cited as the first computer programmer. The engine was never completed, so her program was never run.

II. The Beginnings

Nevertheless, AI as we understand it today can be said to begin with the English mathematician Alan Turing, whose key work dates from the 1930s and 1940s.

In 1936 he published his paper “On Computable Numbers, with an Application to the Entscheidungsproblem”, in which he introduced the concept now known as the “Turing Machine”. This is a theoretical device that reads and writes symbols on an unbounded tape, one cell at a time, according to a finite table of rules.
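To make the idea concrete, here is a minimal Python sketch of a Turing Machine simulator. The rule table, the state names (`right`, `carry`, `halt`) and the `_` blank symbol are illustrative assumptions. The example machine adds 1 to a binary number written on the tape.

```python
from typing import Dict, Tuple

BLANK = "_"

# Rule table: (state, symbol read) -> (symbol to write, head move, next state)
Rules = Dict[Tuple[str, str], Tuple[str, int, str]]

# Hypothetical example machine: add 1 to a binary number.
INCREMENT: Rules = {
    ("right", "0"): ("0", +1, "right"),      # scan right to the end of the number
    ("right", "1"): ("1", +1, "right"),
    ("right", BLANK): (BLANK, -1, "carry"),  # past the end: step back and start adding
    ("carry", "1"): ("0", -1, "carry"),      # 1 + 1 = 0, carry one to the left
    ("carry", "0"): ("1", 0, "halt"),        # 0 + 1 = 1, done
    ("carry", BLANK): ("1", 0, "halt"),      # ran off the left edge: write a new leading 1
}


def run_turing_machine(rules: Rules, tape: str, state: str,
                       halt_state: str = "halt", max_steps: int = 10_000) -> str:
    # Sparse tape: any cell never written reads as BLANK, so it is unbounded both ways.
    cells = dict(enumerate(tape))
    head = 0
    steps = 0
    while state != halt_state:
        if steps >= max_steps:
            raise RuntimeError("step limit reached; the machine may never halt")
        symbol = cells.get(head, BLANK)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += move
        steps += 1
    lo, hi = min(cells), max(cells)
    return "".join(cells.get(i, BLANK) for i in range(lo, hi + 1)).strip(BLANK)


print(run_turing_machine(INCREMENT, "1011", "right"))  # 1100
print(run_turing_machine(INCREMENT, "111", "right"))   # 1000
```

The first call computes 11 + 1 = 12, which is `1100` in binary. The step limit is only a safeguard for the sketch. A real Turing Machine has no such limit, which is exactly why the question of whether it halts becomes interesting.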

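Alonzo Church and Turing independently arrived at what is now called the Church-Turing thesis: any function that can be computed by an algorithm can be computed by a Turing Machine. It is a thesis rather than a theorem, because “algorithm” is an informal notion, but no counterexample has ever been found. In the same paper Turing also described a “Universal Machine”. This is a single Turing Machine that can simulate any other Turing Machine given a description of it, an idea that anticipates the stored-program computer.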

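In the same paper he proved a result now usually stated as the undecidability of the halting problem. There is no general algorithm that, given an arbitrary Turing Machine and its input, can decide whether that machine will eventually halt. From this he concluded that Hilbert’s decision problem (the Entscheidungsproblem) has no general solution.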
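The core of the argument can be sketched in Python, with ordinary functions standing in for Turing Machines (an informal simplification). Given any candidate decider `halts(program, data)`, we build a `contrarian` program that does the opposite of whatever the decider predicts about it. The decider `pessimist` below is hypothetical; the same construction defeats every candidate.

```python
from typing import Callable

Program = Callable[[object], object]
Decider = Callable[[Program, object], bool]


def make_contrarian(halts: Decider) -> Program:
    """Build a program that does the opposite of what `halts` predicts about it."""
    def contrarian(program: Program) -> str:
        if halts(program, program):
            # The decider says "halts", so loop forever to prove it wrong.
            while True:
                pass
        # The decider says "runs forever", so halt at once to prove it wrong.
        return "halted"
    return contrarian


# Hypothetical candidate decider that always answers "does not halt".
def pessimist(program: Program, data: object) -> bool:
    return False


contrarian = make_contrarian(pessimist)
prediction = pessimist(contrarian, contrarian)  # False: "it will never halt"
result = contrarian(contrarian)                 # ...but it halts immediately
print(prediction, result)  # False halted
```

Here the decider predicts that `contrarian(contrarian)` never halts, yet it halts at once. Had the decider answered `True`, `contrarian` would loop forever instead, so either answer is wrong.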

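Between 1939 and 1945 he worked as a codebreaker at Bletchley Park. There he played a central role in breaking the Enigma ciphers that Germany used to send encrypted messages during World War II, including leading the team (Hut 8) that worked on German naval Enigma. This story is the subject of Morten Tyldum’s 2014 film “The Imitation Game”.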

In 1950, Turing published a paper titled “Computing Machinery and Intelligence” in the journal Mind. In it he proposed what he called the “imitation game”, now known as the Turing Test, as a measure of a machine’s intelligence.

The test involved a human judge engaging in a conversation with both a human and a machine, without knowing which was which. If the judge couldn’t reliably distinguish between the human and the machine based on the conversation, the machine would be considered to have passed the test and exhibited human-like intelligence.

While the Turing Test has its critics and limitations, it has been influential in shaping discussions about AI and machine intelligence.

In 1956, the term “Artificial Intelligence” was popularized at the Dartmouth Conference; John McCarthy had coined it in the 1955 proposal for the event. Pioneers such as John McCarthy, Nathaniel Rochester, Marvin Minsky and Claude Shannon gathered there to discuss the possibility of creating machines that could simulate human intelligence.

The early years of AI research were marked by optimism, with researchers believing that they could quickly achieve human-level intelligence in machines.

Frank Rosenblatt is known for his groundbreaking work on the “perceptron”, which played a significant role in the early development of AI. The perceptron was one of the first learning algorithms for artificial neurons and can be considered a precursor of modern neural networks.

Frank Rosenblatt invented the perceptron in January 1957 while working at the Cornell Aeronautical Laboratory. This invention marked a pivotal moment in the history of AI and machine learning, as it was a fundamental building block of neural networks and laid the foundation for future developments in AI.

The perceptron is often regarded as the first modern neural network. It was designed to mimic the functioning of a single biological neuron, making it an early attempt to create a computational model inspired by the human brain. Rosenblatt described the perceptron as a machine “capable of having an original idea,” highlighting its potential to learn and make decisions based on data.
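The perceptron learning rule is simple enough to sketch in a few lines. The version below is a minimal Python sketch that assumes a step activation, a learning rate of 1 and the logical AND function as toy training data. It illustrates the rule and is not Rosenblatt’s own implementation.

```python
from typing import List, Sequence, Tuple

Sample = Tuple[Tuple[int, ...], int]


def predict(weights: Sequence[float], bias: float, x: Sequence[float]) -> int:
    # Step activation: fire (1) if the weighted sum exceeds 0, otherwise 0.
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples: List[Sample], epochs: int = 20,
                     lr: float = 1.0) -> Tuple[List[float], float]:
    weights = [0.0] * len(samples[0][0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            error = target - predict(weights, bias, x)  # -1, 0 or +1
            if error != 0:
                # Nudge each weight toward the correct answer.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
                mistakes += 1
        if mistakes == 0:  # a full pass with no errors: training has converged
            break
    return weights, bias


# Toy training data: the logical AND function (linearly separable).
AND_SAMPLES: List[Sample] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
weights, bias = train_perceptron(AND_SAMPLES)
print([predict(weights, bias, x) for x, _ in AND_SAMPLES])  # [0, 0, 0, 1]
```

Whenever a prediction is wrong, each weight moves in the direction that would have reduced the error. For linearly separable data such as AND, this rule is guaranteed to converge (the perceptron convergence theorem).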

Rosenblatt’s work on the perceptron paved the way for the development of more complex neural networks and deep learning algorithms, which are the cornerstone of contemporary AI applications.

III. Symbolic AI

During the 1960s and 1970s, AI focused primarily on what is now known as symbolic AI. This approach is based on the idea that all forms of knowledge can be represented by symbols, and that machines can be programmed to manipulate these symbols to solve problems.
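As a rough illustration of symbol manipulation, here is a minimal forward-chaining rule engine in Python, in the spirit of the inference used by many symbolic systems. The facts and rules are hypothetical examples, written as plain strings without variables for brevity.

```python
from typing import FrozenSet, Iterable, List, Set, Tuple

# A rule: if every premise is a known fact, the conclusion becomes a known fact.
Rule = Tuple[FrozenSet[str], str]


def forward_chain(facts: Iterable[str], rules: List[Rule]) -> Set[str]:
    known = set(facts)
    changed = True
    while changed:  # keep applying rules until nothing new can be derived
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


# Hypothetical knowledge base about a bird called Tweety.
RULES: List[Rule] = [
    (frozenset({"bird(tweety)", "healthy(tweety)"}), "can_fly(tweety)"),
    (frozenset({"has_feathers(tweety)"}), "bird(tweety)"),
]

derived = forward_chain({"has_feathers(tweety)", "healthy(tweety)"}, RULES)
print(sorted(derived))
# ['bird(tweety)', 'can_fly(tweety)', 'has_feathers(tweety)', 'healthy(tweety)']
```

The program never "understands" birds. It only checks whether sets of symbols are present and adds new symbols, which is the essence of the symbolic approach.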

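In 1966, Joseph Weizenbaum at MIT created ELIZA, one of the most celebrated computer programs of all time. ELIZA held text conversations by matching the user’s input against keyword patterns and echoing parts of it back. With its best-known script, DOCTOR, which imitated a psychotherapist, it led some users to believe the software understood them and even had humanlike emotions.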
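ELIZA’s apparent understanding came from simple keyword matching and text substitution. The Python sketch below imitates that style with regular expressions. The rules and pronoun table are hypothetical and far smaller than Weizenbaum’s DOCTOR script.

```python
import re

# Pronoun swaps so the echoed fragment reads naturally from the program's side.
REFLECTIONS = {"i": "you", "me": "you", "my": "your", "am": "are", "you": "I", "your": "my"}

# Hypothetical keyword rules, tried in order; the first full match wins.
RULES = [
    (re.compile(r"i need (.*)", re.IGNORECASE), "Why do you need {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r".*\bmother\b.*", re.IGNORECASE), "Tell me more about your family."),
]
DEFAULT_REPLY = "Please tell me more."


def reflect(fragment: str) -> str:
    return " ".join(REFLECTIONS.get(word, word) for word in fragment.lower().split())


def respond(text: str) -> str:
    text = text.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(text)
        if match:
            # Echo the captured fragment back, with pronouns swapped.
            return template.format(*(reflect(group) for group in match.groups()))
    return DEFAULT_REPLY


print(respond("I am sad about my job."))  # How long have you been sad about your job?
print(respond("My mother called"))        # Tell me more about your family.
```

There is no model of meaning anywhere in the program, which is why users’ emotional reactions to ELIZA surprised and troubled Weizenbaum.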

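Between 1966 and 1972, the Stanford Research Institute (SRI) developed Shakey, the first general-purpose mobile robot able to reason about its own actions. It combined AI planning, computer vision, navigation and natural language processing. It is often described as a forerunner of today’s self-driving cars and drones.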
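One lasting legacy of the Shakey project is the A* search algorithm, published by Peter Hart, Nils Nilsson and Bertram Raphael in 1968 for planning the robot’s routes. Below is a minimal Python sketch of A* on a grid. It assumes 4-way movement with unit step costs and uses Manhattan distance as the heuristic; the grid itself is a made-up example.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    rows, cols = len(grid), len(grid[0])

    def h(cell: Cell) -> int:
        # Manhattan distance: never overestimates with 4-way unit-cost moves.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap: List[Tuple[int, int, Cell]] = [(h(start), 0, start)]  # (f = g + h, g, cell)
    best_cost: Dict[Cell, int] = {start: 0}
    came_from: Dict[Cell, Cell] = {}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = [cell]
            while cell in came_from:  # walk back to the start
                cell = came_from[cell]
                path.append(cell)
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry: a cheaper route to this cell was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None  # goal unreachable


# Hypothetical map: 0 = free floor, 1 = obstacle.
GRID = [
    [0, 0, 0, 0],
    [1, 1, 0, 1],
    [0, 0, 0, 0],
]
print(astar(GRID, (0, 0), (2, 0)))
```

On this map the only shortest route goes around the wall through the gap at column 2, so the call returns `[(0, 0), (0, 1), (0, 2), (1, 2), (2, 2), (2, 1), (2, 0)]`. The heuristic steers the search toward the goal, so fewer cells are explored than with a blind breadth-first search.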

Nevertheless, progress was slower than promised. This led to periods of sharply reduced funding and interest now known as “AI winters”, roughly in the mid-to-late 1970s and again in the late 1980s and early 1990s.

IV. The Rise of Machine Learning

In the late 20th and early 21st centuries, the field of AI shifted decisively toward Machine Learning.

Instead of explicitly programming rules and knowledge into machines, researchers began to develop algorithms that allowed machines to learn on their own from data.
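A simple way to see the difference is an algorithm that contains no hand-written rule at all. The Python sketch below uses k-nearest neighbors, one of the oldest learning methods. It is chosen here for brevity and is not presented as the method behind this shift. It classifies a new point by a majority vote among the k most similar labelled examples. The data points and labels are hypothetical.

```python
import math
from collections import Counter
from typing import List, Sequence, Tuple

Example = Tuple[Tuple[float, float], str]


def knn_predict(examples: List[Example], query: Sequence[float], k: int = 3) -> str:
    # Sort the labelled examples by Euclidean distance to the query point.
    nearest = sorted(examples, key=lambda ex: math.dist(ex[0], query))[:k]
    # Majority vote among the k closest labels.
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


# Hypothetical labelled data: two clusters of 2-D points.
EXAMPLES: List[Example] = [
    ((1.0, 1.0), "small"), ((1.2, 0.8), "small"), ((0.9, 1.1), "small"),
    ((5.0, 5.0), "large"), ((5.2, 4.8), "large"), ((4.9, 5.1), "large"),
]
print(knn_predict(EXAMPLES, (1.1, 1.0)))  # small
print(knn_predict(EXAMPLES, (4.8, 5.0)))  # large
```

Changing the behaviour of this program means changing its data, not its code. That is the practical difference from the rule-based systems described earlier.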

This change was driven by the increased availability of large amounts of data, greater computing power, and the development of new techniques and algorithms, such as improved methods for training neural networks.

In 1990 Ray Kurzweil predicted that a computer could beat the world chess champion before the year 2000.

He was right.

In 1997, IBM’s supercomputer Deep Blue defeated the world chess champion Garry Kasparov.

The match was played over six games, and Deep Blue won 3.5 to 2.5. The two players went into the final game level at 2.5 points each, and Deep Blue won it. It was a historic moment.

On move 36 of the second game of the match, Garry Kasparov executed a calculated sacrifice, offering Deep Blue the chance to capture two pawns. This sacrifice was a strategic move, setting the stage for a strong counterattack later in the game.

Kasparov knew that computers of the time tended to favor short-term material gains and struggled to judge long-term strategic consequences.

After Kasparov’s move, Deep Blue spent a full 15 minutes on its decision. It ignored the offered pawns and instead made a quiet pawn move. This surprising choice not only thwarted Kasparov’s planned attack but also set the computer up for a complex, multi-step victory, impressing the chess experts in the auditorium.

Kasparov was astonished by Deep Blue’s move, which seemed to show the kind of strategic judgment he believed was needed to play chess at the highest level. Nine moves later, facing what looked like a hopeless position, Kasparov resigned, and the match was tied at 1-1.

This pivotal moment in the match showcased the capabilities of Deep Blue and marked a significant milestone in the intersection of chess and artificial intelligence.
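Deep Blue chose its moves with a deep game-tree search based on minimax with alpha-beta pruning, guided by a hand-tuned evaluation function and running on custom chess chips. The Python sketch below shows only the search idea, on a tiny hypothetical tree whose leaves hold evaluation scores. It omits everything that made Deep Blue strong: move generation, evaluation and specialized hardware.

```python
import math
from typing import List, Optional, Union

# A game tree: a leaf is a numeric evaluation score, an inner node is a list of children.
Tree = Union[float, int, List["Tree"]]


def alphabeta(node: Tree, maximizing: bool, alpha: float = -math.inf,
              beta: float = math.inf, evaluated: Optional[List[float]] = None) -> float:
    if isinstance(node, (int, float)):
        if evaluated is not None:
            evaluated.append(node)  # record which leaves were actually examined
        return node
    if maximizing:
        value = -math.inf
        for child in node:
            value = max(value, alphabeta(child, False, alpha, beta, evaluated))
            alpha = max(alpha, value)
            if alpha >= beta:
                break  # the minimizing player will never allow this line: prune
        return value
    value = math.inf
    for child in node:
        value = min(value, alphabeta(child, True, alpha, beta, evaluated))
        beta = min(beta, value)
        if alpha >= beta:
            break  # the maximizing player already has something better: prune
    return value


# Hypothetical two-ply tree: we pick a move, then the opponent picks a reply.
tree = [[3, 5], [6, 9], [1, 2]]
seen: List[float] = []
print(alphabeta(tree, True, evaluated=seen))  # 6
print(seen)                                   # [3, 5, 6, 9, 1]
```

Alpha-beta returns the same value as plain minimax but skips branches that cannot change the result; here the last leaf is never evaluated. A program that searches a fixed number of moves ahead can still miss consequences beyond its horizon. That is the kind of weakness Kasparov was hoping to exploit.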

In an interview in 1996, Kasparov said: “in some of his (Deep Blue’s) moves I had the feeling of being faced with a new form of intelligence, of witnessing a certain creativity”.

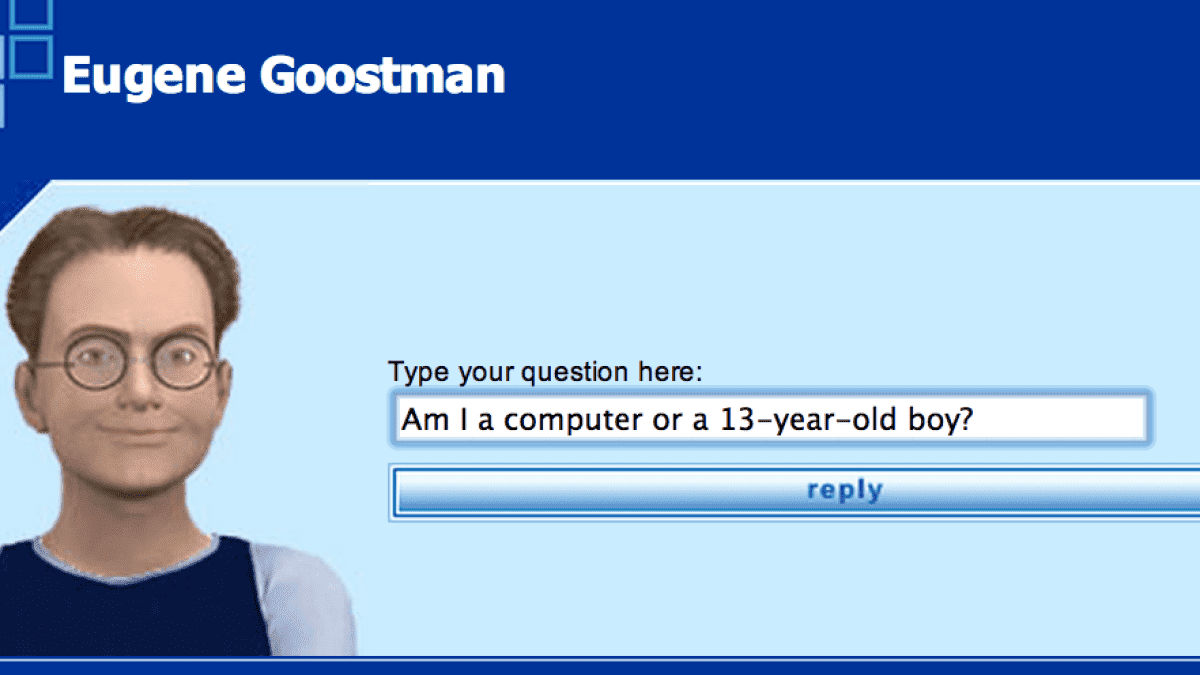

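In 2014, a chatbot named Eugene Goostman, which posed as a 13-year-old Ukrainian boy, was claimed to have passed the Turing test. It convinced about a third of the judges at a competition that they were talking to a human. The claim was widely disputed, not least because the persona of a young non-native speaker excused many of its mistakes.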

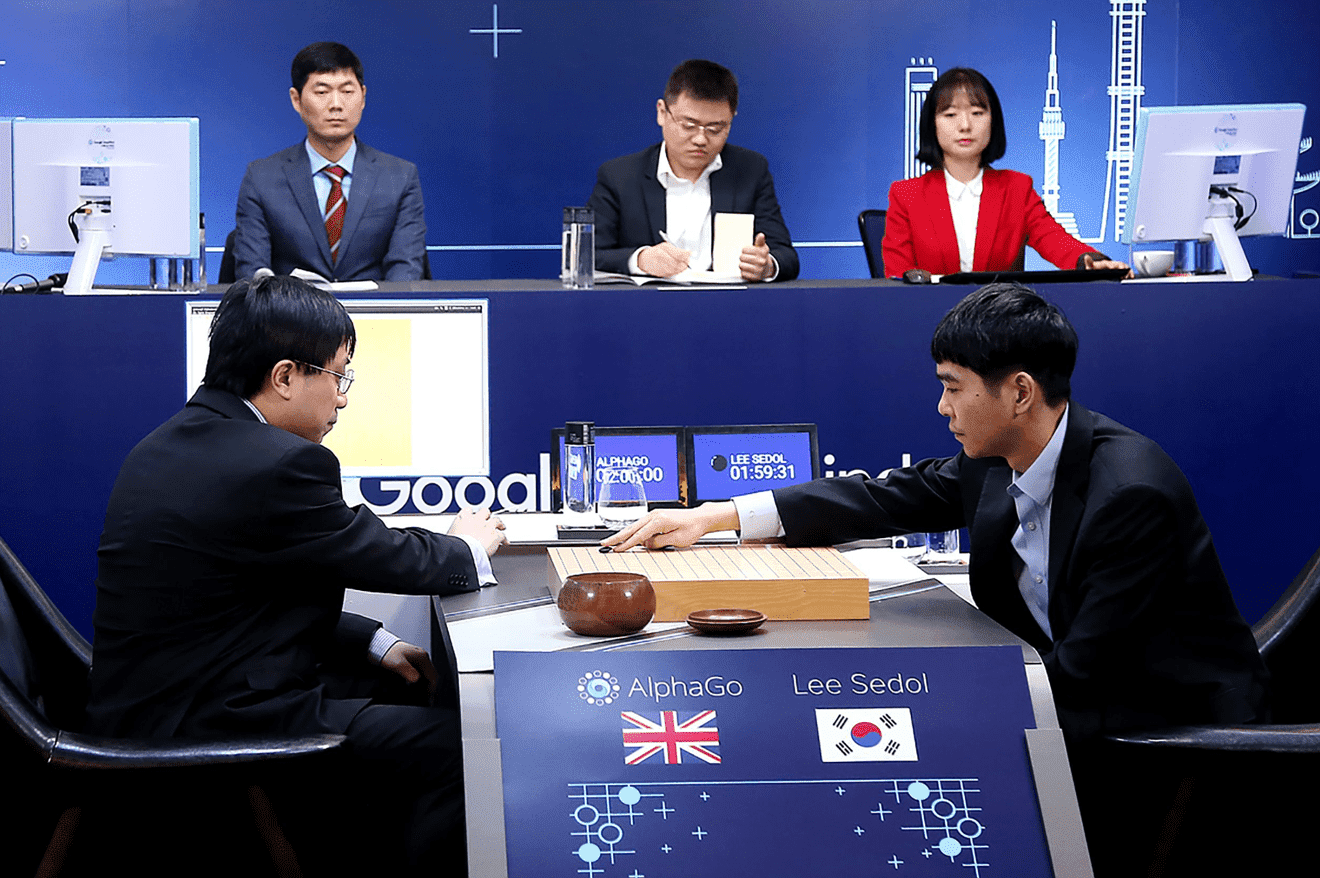

But one of the most important leaps in AI came in 2016, when AlphaGo, developed by DeepMind (acquired by Google in 2014), defeated Lee Sedol, one of the strongest Go players in the world, by 4 games to 1.

Go is far harder to analyze than chess. It cannot be tackled by “brute force”, because it has approximately 2×10^170 legal board positions, while the observable universe is estimated to contain only about 10^80 atoms.
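The size of that number can be checked with a quick upper bound. Each of the 361 intersections on a 19×19 board is empty, black or white, so there are at most 3^361 board configurations. A short Python check:

```python
import math

BOARD_POINTS = 19 * 19           # intersections on a standard Go board
upper_bound = 3 ** BOARD_POINTS  # each point is empty, black or white

print(len(str(upper_bound)))              # 173 (number of decimal digits)
print(round(math.log10(upper_bound), 2))  # 172.24
```

So 3^361 is about 1.7×10^172. The roughly 2×10^170 legal positions are only about 1% of all configurations, yet still vastly more than the roughly 10^80 atoms usually estimated for the observable universe.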

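Instead, DeepMind combined deep neural networks with Monte Carlo tree search. The networks were first trained on records of games played by human experts and then improved further by playing against themselves (reinforcement learning). Its successor, AlphaGo Zero (2017), went a step further: it was given only the rules of the game and learned everything else through self-play.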
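AlphaGo’s search is far more sophisticated, but Monte Carlo tree search rests on a simple idea: estimate how good a move is by simulating many games from the position it leads to. The Python sketch below shows only that idea. It uses flat Monte Carlo evaluation with uniformly random playouts (no search tree and no neural networks), on tic-tac-toe as a hypothetical stand-in for Go.

```python
import random
from typing import List, Optional

# The eight winning lines on a 3x3 board, as indices 0..8 (row by row).
LINES = [(0, 1, 2), (3, 4, 5), (6, 7, 8), (0, 3, 6),
         (1, 4, 7), (2, 5, 8), (0, 4, 8), (2, 4, 6)]


def winner(board: List[str]) -> Optional[str]:
    for a, b, c in LINES:
        if board[a] != " " and board[a] == board[b] == board[c]:
            return board[a]
    return None


def random_playout(board: List[str], to_move: str, rng: random.Random) -> Optional[str]:
    board = board[:]  # play on a copy
    while winner(board) is None and " " in board:
        move = rng.choice([i for i, s in enumerate(board) if s == " "])
        board[move] = to_move
        to_move = "O" if to_move == "X" else "X"
    return winner(board)  # "X", "O", or None for a draw


def monte_carlo_move(board: List[str], player: str, playouts: int = 200, seed: int = 0) -> int:
    rng = random.Random(seed)
    opponent = "O" if player == "X" else "X"
    best_move, best_score = -1, -1.0
    for move in [i for i, s in enumerate(board) if s == " "]:
        child = board[:]
        child[move] = player
        score = 0.0
        for _ in range(playouts):
            result = random_playout(child, opponent, rng)
            score += 1.0 if result == player else 0.5 if result is None else 0.0
        score /= playouts  # average outcome: 1 = win, 0.5 = draw, 0 = loss
        if score > best_score:
            best_move, best_score = move, score
    return best_move


# Hypothetical position: X has two in a row on the top line and is to move.
BOARD = ["X", "X", " ",
         "O", "O", " ",
         " ", " ", " "]
print(monte_carlo_move(BOARD, "X"))  # 2
```

The winning square scores a perfect 1.0 and is chosen. In AlphaGo, neural networks guide which moves are explored and help evaluate positions, instead of relying on purely random games.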

V. The Modern Era

Today, AI is applied to, or being researched for, tasks such as:

- Image and voice recognition.

- Machine translation.

- Writing code in many programming languages.

- Disease diagnosis.

- Designing new proteins.

- Research tasks such as deciphering ancient languages and manuscripts, for example the carbonized scrolls found at Herculaneum.

- Development of neural prostheses that may one day help treat diseases that are currently incurable, such as Alzheimer’s and Parkinson’s.

- Brain-computer interfaces, such as those developed by Neuralink, that read brain signals so that actions can be triggered by thought alone.

- Fields such as nanotechnology and bioengineering.

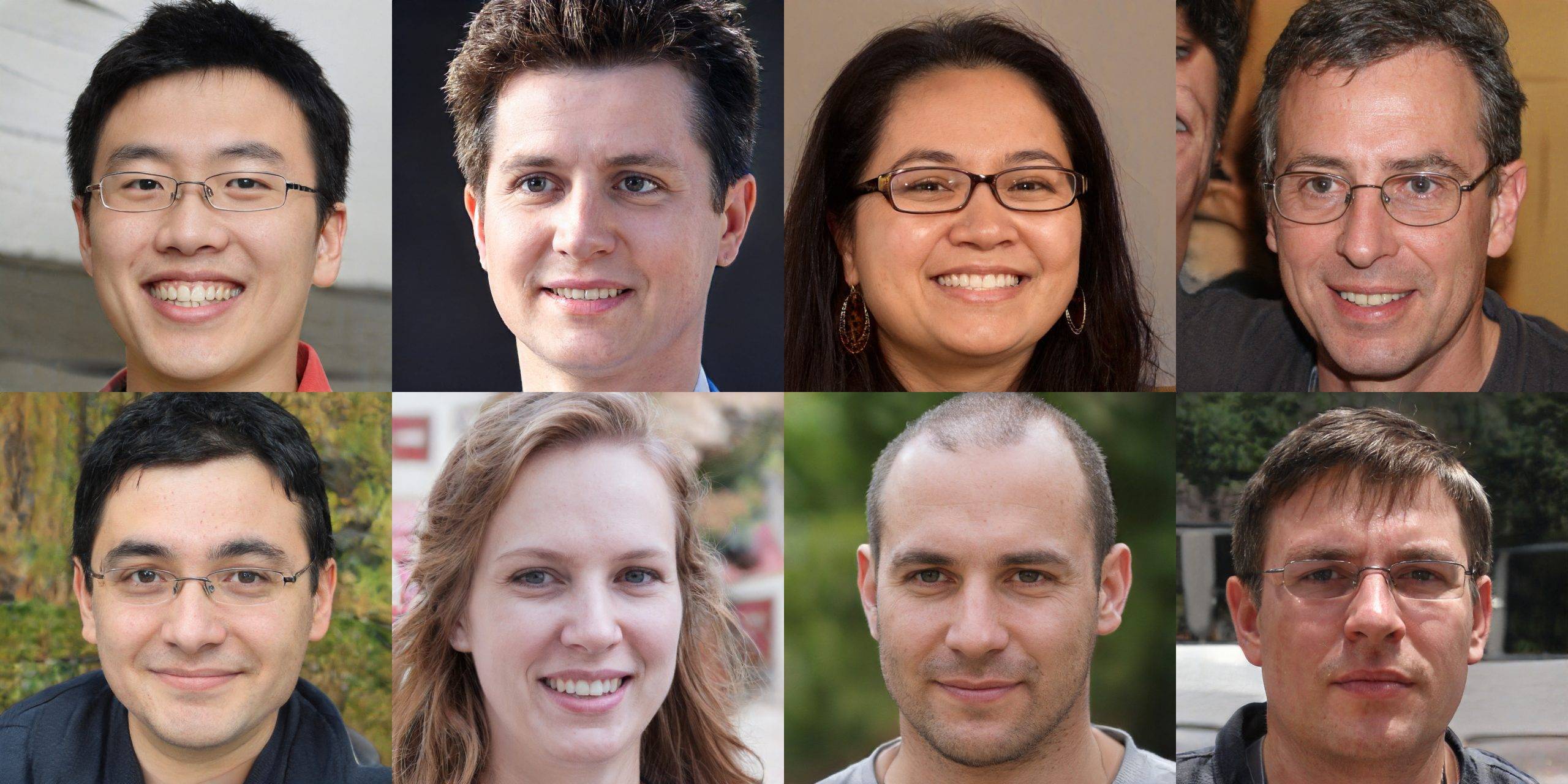

In 2017, researchers from Google Brain published the paper “Attention Is All You Need”.

This paper has had a significant impact on the field of Artificial Intelligence (AI), especially in Natural Language Processing (NLP) and Deep Learning.

The paper introduced the Transformer architecture, a neural network model for NLP tasks that relies solely on attention mechanisms to process input sequences, dispensing with recurrence and convolutions entirely.

Attention mechanisms allow a neural network to focus selectively on the most relevant parts of the input sequence. This enables the model to capture long-range dependencies and contextual relationships between words in a sentence.
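The core operation of the Transformer is scaled dot-product attention, softmax(Q·K^T / sqrt(d_k))·V. Each query is compared with every key, and the resulting weights mix the corresponding values. The pure-Python sketch below computes it for a single attention head on hypothetical toy vectors. In a real Transformer, Q, K and V are learned linear projections of the token embeddings, computed with matrix libraries.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def softmax(scores: List[float]) -> List[float]:
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(queries: Matrix, keys: Matrix,
                                 values: Matrix) -> Tuple[Matrix, Matrix]:
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        # Output = attention-weighted average of the value vectors.
        outputs.append([sum(wi * v[j] for wi, v in zip(w, values))
                        for j in range(len(values[0]))])
        weights.append(w)
    return outputs, weights


# Hypothetical toy inputs: three tokens, d_k = 3, value vectors of size 2.
KEYS = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
VALUES = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
QUERIES = [[0.0, 20.0, 0.0]]  # strongly matches the second key

outputs, weights = scaled_dot_product_attention(QUERIES, KEYS, VALUES)
print([round(w, 4) for w in weights[0]])  # [0.0, 1.0, 0.0]
print([round(x, 4) for x in outputs[0]])  # [0.0, 1.0]
```

Because the query closely matches the second key, almost all of the attention weight lands on it, and the output is almost exactly the second value vector. Every query is processed independently of the others, which is what makes the computation easy to parallelize.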

Earlier models for NLP tasks, such as recurrent neural networks (RNNs) and convolutional neural networks (CNNs), often struggle to capture long-range dependencies. RNNs in particular process a sequence one token at a time, which makes them hard to parallelize and slow to train on long sequences.

The authors of the paper argue that attention-based models are superior to traditional models for NLP tasks because they allow the model to selectively attend to different parts of the input sequence, thus capturing important contextual relationships between words.

The Transformer architecture proposed in the paper has become one of the most widely used models for NLP tasks and underlies models such as BERT and GPT.

The success of the Transformer architecture has inspired further research into attention mechanisms and non-recurrent architectures for sequence processing.