What is AI

Artificial Intelligence: Bridging the Gap Between Machines and Minds.

“What is AI?”

This question has been at the forefront of technological and philosophical discussions for decades. Artificial Intelligence (AI) is a multidisciplinary field that seeks to create machines capable of performing tasks that, when done by humans, typically require intelligence. Its definitions keep evolving, but they share a strong focus on replicating human intelligence.

While it draws inspiration from the human brain’s structure and functioning, AI differs significantly from human intelligence in terms of consciousness and emotions (at least for now!).

We will explore the diverse definitions of AI, its relationship with human intelligence and how AI models work.

Defining Artificial Intelligence

The definition of AI has evolved over time, reflecting both technological advancements and changing perspectives. One fundamental definition characterizes AI as the development of computer systems capable of performing tasks that would ordinarily require human intelligence. These tasks include problem-solving, learning, reasoning, perception, and language understanding. AI seeks to imbue machines with the ability to think, learn from experience, adapt to new situations, and perform tasks autonomously.

Another definition focuses on AI as a field of study and research that incorporates various subfields like machine learning, natural language processing, robotics, and computer vision. AI researchers aim to create algorithms and systems that can process data, extract patterns, and make decisions in a manner that mimics human cognitive abilities.

Here are several definitions given by AI experts:

John Kelleher, in his book “Deep Learning” (Cambridge, MA: MIT Press, 2019), says:

“AI … are computational systems capable of performing tasks that normally require human intelligence.”

That is, tasks where “learning” and “problem solving” are present.

Margaret Boden, in “AI: Its Nature and Future” (Oxford University Press, 2016), asserts that AI requires psychological skills such as prediction, association, planning, perception, and anticipation, that is, the skills that enable humans and animals to achieve their goals.

Other authors, such as Murray Shanahan in his book “The Technological Singularity” (2015), propose additional elements such as common sense and creativity.

AI vs. Other Computer Software.

AI is distinct from other computer software in several ways. Conventional software follows pre-defined instructions and executes tasks based on explicit programming. In contrast, AI systems can adapt and learn from data, improving their performance over time. Machine learning algorithms, a subset of AI, can discover patterns and make decisions without explicit programming.
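To make the contrast concrete, here is a minimal Python sketch. The spam scenario, the `num_links` feature, the toy data, and all function names are assumptions made up for illustration; real systems use far richer features and models. The first function is a hand-written rule, while the second derives its rule from labeled examples.

```python
def rule_based_is_spam(num_links):
    """Conventional software: a programmer picks the threshold by hand."""
    return num_links > 5


def learn_threshold(examples):
    """Learning from data: pick the threshold t for which the rule
    `num_links > t` agrees with the most labeled examples."""
    candidates = sorted({n for n, _ in examples})
    best_t, best_correct = candidates[0], -1
    for t in candidates:
        correct = sum((n > t) == is_spam for n, is_spam in examples)
        if correct > best_correct:
            best_t, best_correct = t, correct
    return best_t


# Hypothetical labeled data: (number of links in a message, is it spam?)
training_data = [(0, False), (1, False), (2, False), (3, True), (4, True), (7, True)]
learned_t = learn_threshold(training_data)


def learned_is_spam(num_links):
    return num_links > learned_t


print(learned_t)               # 2
print(rule_based_is_spam(3))   # False: the hand-written rule misses this case
print(learned_is_spam(3))      # True: the learned rule follows the data
```

If the data changes, only `training_data` needs updating for the learned rule, whereas the hand-written rule has to be edited by a programmer.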

Moreover, AI often deals with unstructured data, such as text, images, and audio, and can extract meaningful insights from it. Traditional software typically works with structured data and is not designed for complex data analysis and interpretation.

AI’s Relationship to Human Intelligence and the Brain.

AI’s pursuit of replicating human intelligence raises questions about the relationship between AI and the human brain. Human intelligence is a complex interplay of cognitive processes, including perception, memory, reasoning, problem-solving, and creativity. AI seeks to model these processes through computational means.

One view is that AI is an attempt to reverse-engineer the human brain.

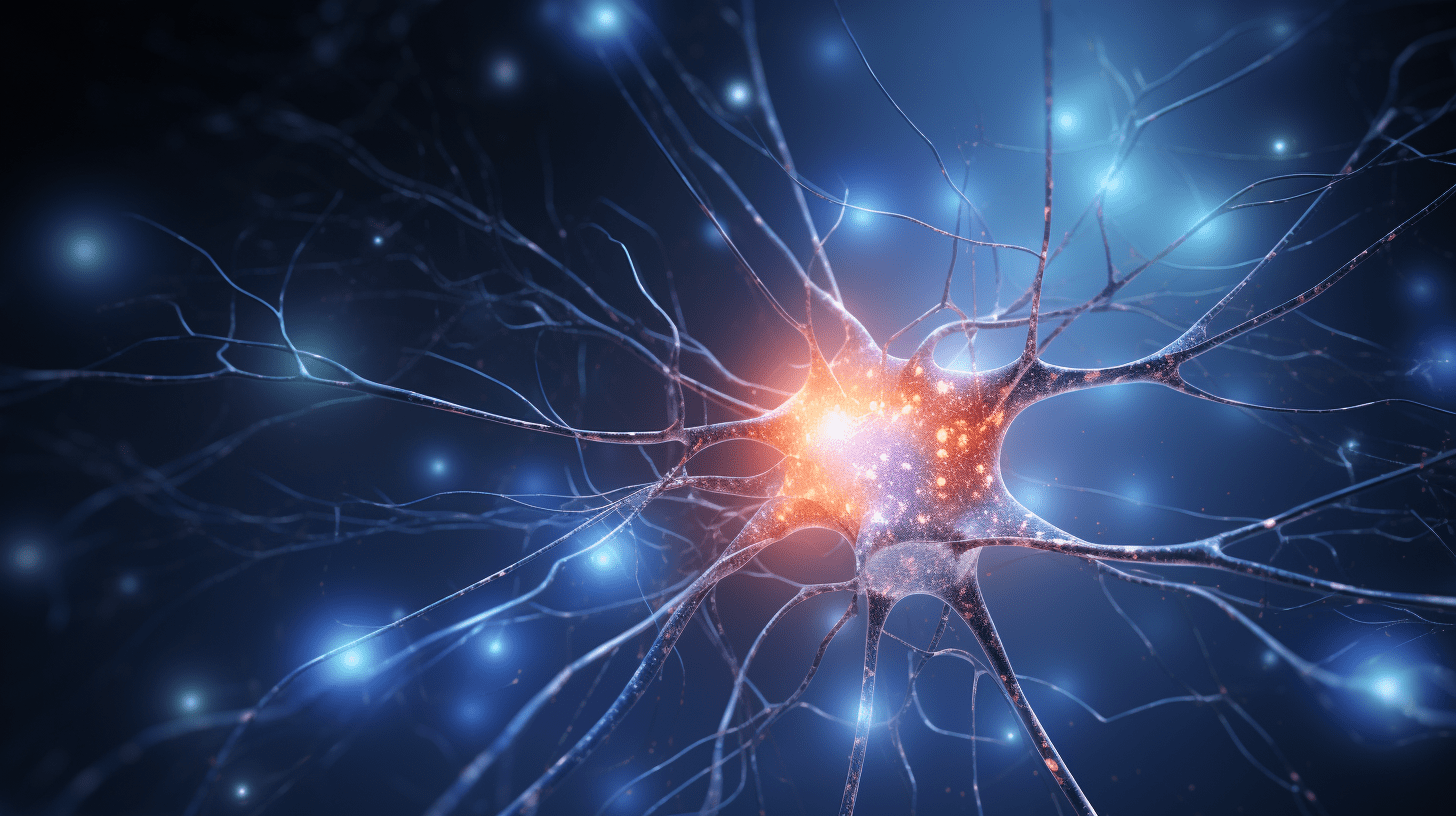

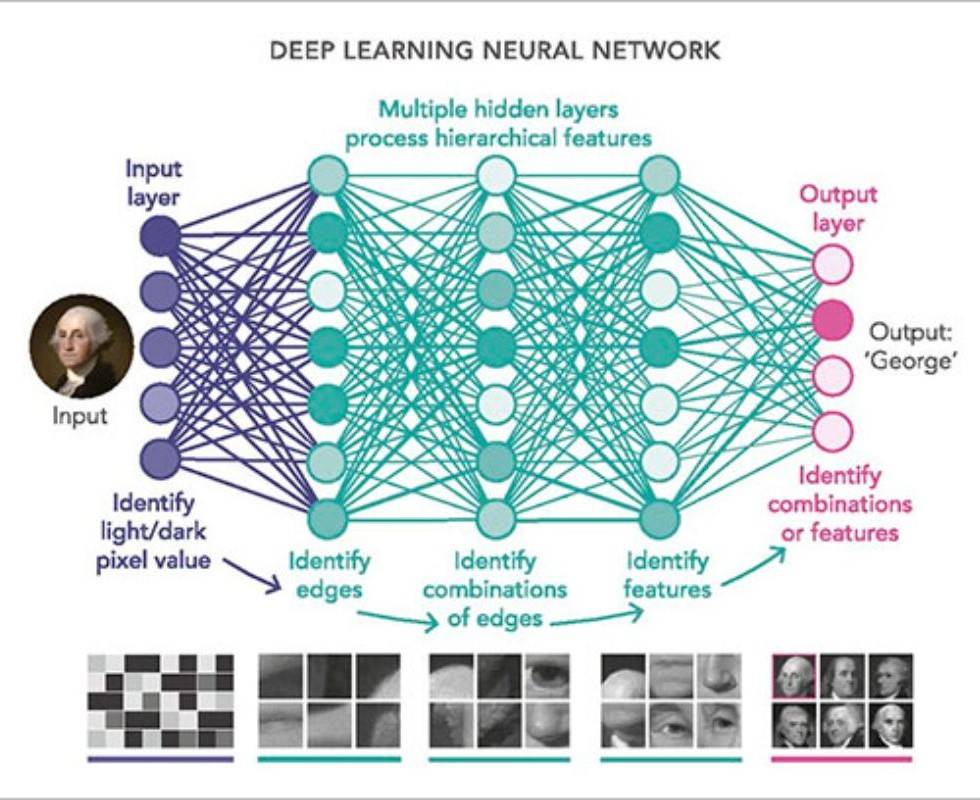

Neural networks, a type of AI model, are inspired by the structure and functioning of biological neurons.

Deep learning, a subset of machine learning, relies on neural networks to analyze data and make predictions, and it has shown remarkable success in various applications such as image and speech recognition.

While AI may be inspired by the brain’s structure, it is important to note that AI models are vastly simplified compared to the complexity of the human brain.

AI also differs from human intelligence in some fundamental ways. AI lacks consciousness, self-awareness, and emotions, which are integral aspects of human intelligence. Human intelligence is deeply intertwined with consciousness and subjective experience, while AI operates solely on algorithms and data processing.

Artificial General Intelligence (AGI): The Quest for Human-Like Intelligence.

We must distinguish between three categories. Classical artificial intelligence (CAI) covers machines that must be explicitly programmed to perform a certain task (for example, Deep Blue). Artificial narrow intelligence (ANI) focuses on solving specific tasks and is capable of learning by itself from data (for example, AlphaGo). Artificial general intelligence (AGI) represents the pinnacle of AI development: an aspiration to create machines that possess human-like intelligence across a wide range of tasks.

AGI aims to replicate the cognitive versatility and adaptability seen in humans.

AGI, often referred to as “strong AI” or “full AI”, encompasses systems that possess the ability to understand, learn, and perform any intellectual task that a human being can do.

Unlike narrow AI, which excels in specialized domains like natural language processing or image recognition, AGI strives to exhibit general intelligence. It should exhibit reasoning, problem-solving, and adaptability across various domains and situations.

One of the key distinctions between AGI and other AI systems is the breadth of transfer learning. Today's models can transfer what they have learned mainly between closely related tasks, whereas AGI would leverage knowledge and skills acquired in one domain to excel in a very different one, much like how humans can apply their learning from one context to another.

Challenges in Achieving AGI.

Developing AGI is an exceedingly complex and challenging endeavor. The difficulties arise from the need to replicate the full spectrum of human intelligence. Some of the significant challenges include:

- Generalization: Ensuring that an AGI system can generalize its knowledge and adapt to novel scenarios is a formidable task. Humans possess a remarkable ability to apply knowledge from one context to another, even when the situations seem unrelated.

- Common Sense Reasoning: Humans possess a vast reservoir of common sense knowledge that often goes unstated but is critical for making sense of the world. Developing AI systems with robust common sense reasoning abilities remains an ongoing challenge.

- Self-Awareness: Human intelligence is not only about problem-solving but also about self-awareness, consciousness, and emotions. Current AI systems have yet to approach anything resembling true consciousness.

- Ethical and Safety Concerns: AGI raises ethical questions about the potential consequences of creating machines with human-like intelligence. Ensuring their responsible use and preventing misuse are paramount concerns.

- Data and Learning: AGI systems would need vast amounts of data and continuous learning capabilities to stay up-to-date and adapt to evolving situations.

The Impact and Implications of AGI.

The development of AGI has the potential to revolutionize nearly every aspect of human life.

AGI could automate complex tasks, accelerate scientific research, enhance healthcare, and assist in addressing global challenges like poverty.

However, the rise of AGI also raises profound ethical, social, and economic questions.

It has the potential to reshape the job market, challenging existing employment structures.

It also brings about concerns related to privacy, security, and the consequences of granting immense decision-making power to non-human entities.

AI Models and Their Foundations.

To understand how AI models work, it’s essential to delve into the foundational technologies that have driven their development.

Several key concepts and techniques have played pivotal roles, including Deep Learning, Neural Networks, Transformers, and more.

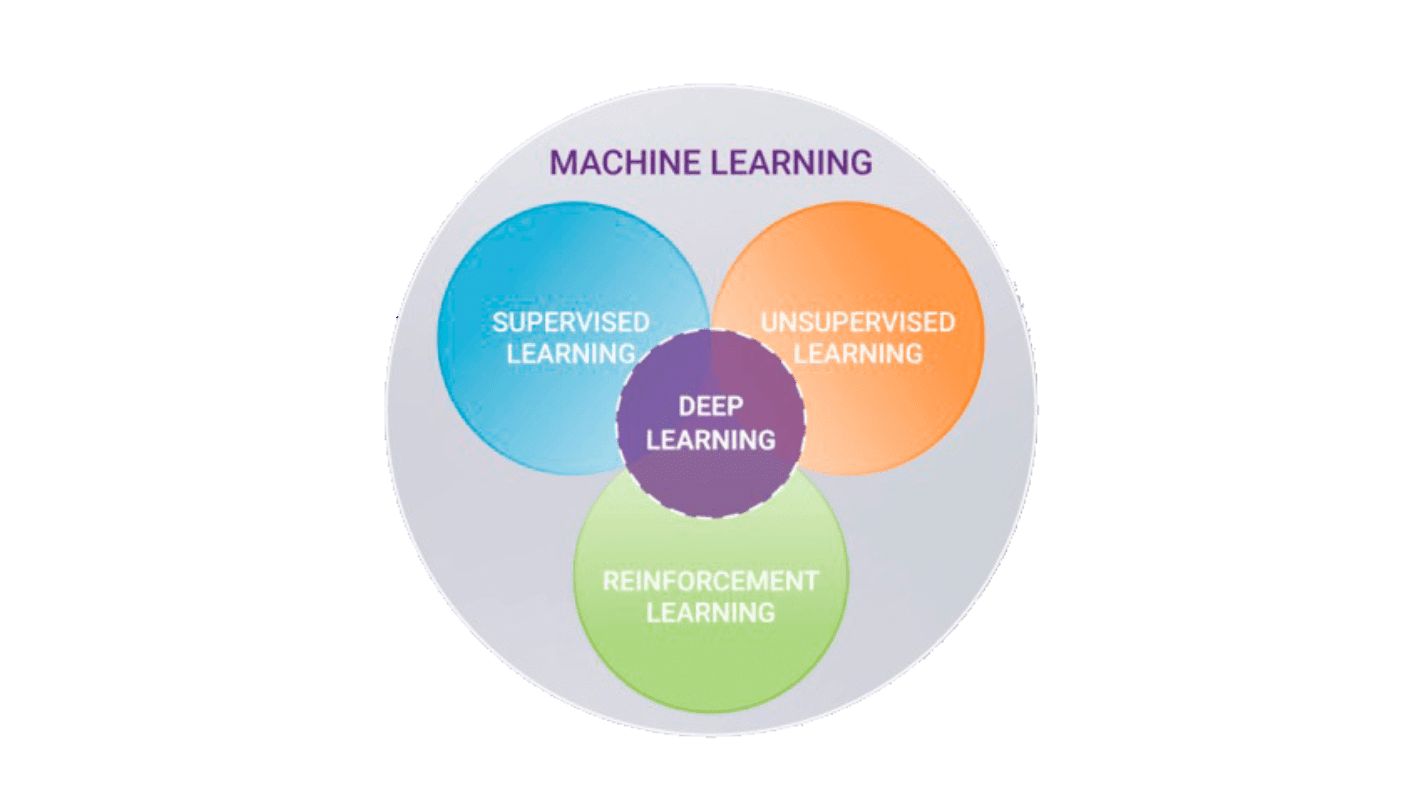

These technologies fall within the field known as Machine Learning.

Machine Learning (ML) is a subset of artificial intelligence (AI) that focuses on developing algorithms and models that enable computers to learn from data and improve their performance on specific tasks over time, without being explicitly programmed. In essence, it’s a way for computers to automatically discover patterns, make predictions, or optimize decisions based on the information they receive.

Here’s a breakdown of what machine learning is and what it’s used for:

1. Learning from Data: At its core, ML is all about learning from data. It uses large datasets to identify patterns, relationships, and trends that are often too complex for humans to discover or program explicitly. These patterns could be in the form of statistical correlations, mathematical functions, or even abstract representations, depending on the specific ML technique used.

2. Generalization: ML models aim to generalize from the data they’ve been trained on. This means they can make accurate predictions or decisions not only on the training data but also on new, unseen data that shares similar characteristics with the training data. Generalization is a crucial aspect of ML because it ensures that the learned knowledge is applicable in real-world scenarios.

3. Types of Machine Learning:

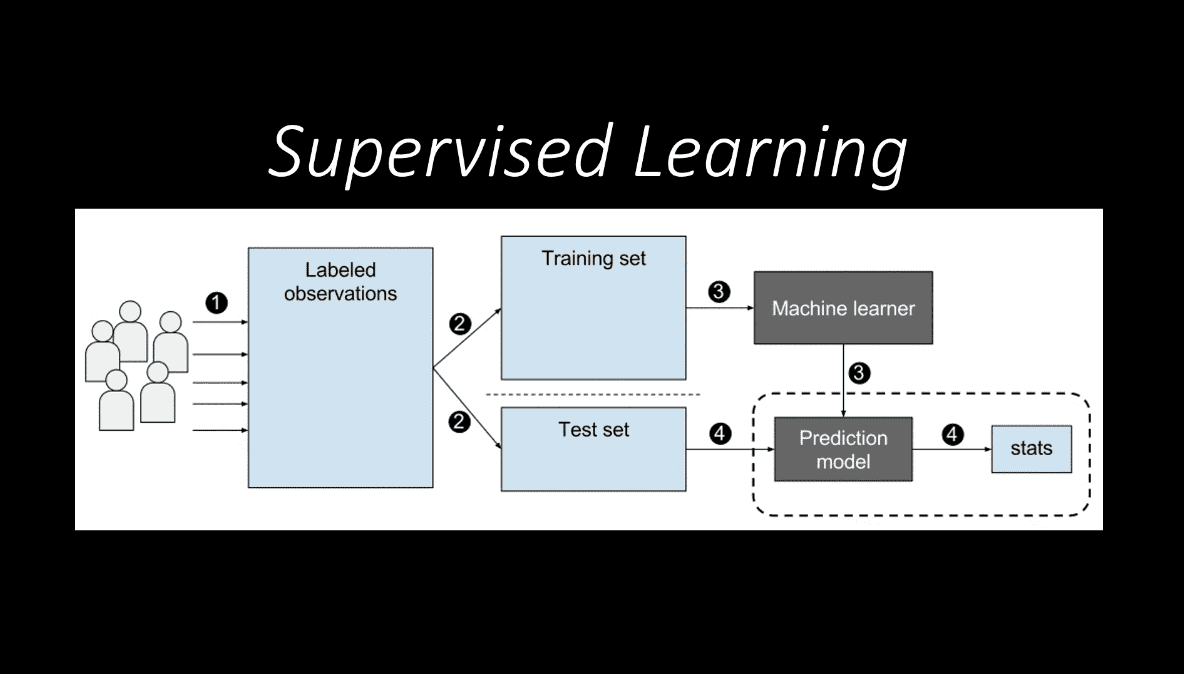

- Supervised Learning: In this type, the ML model is trained on labeled data, which means the input data is paired with corresponding correct outputs. The model learns to map inputs to outputs, making it capable of making predictions on new, unseen data. Common applications include image classification, spam detection, and stock price prediction.

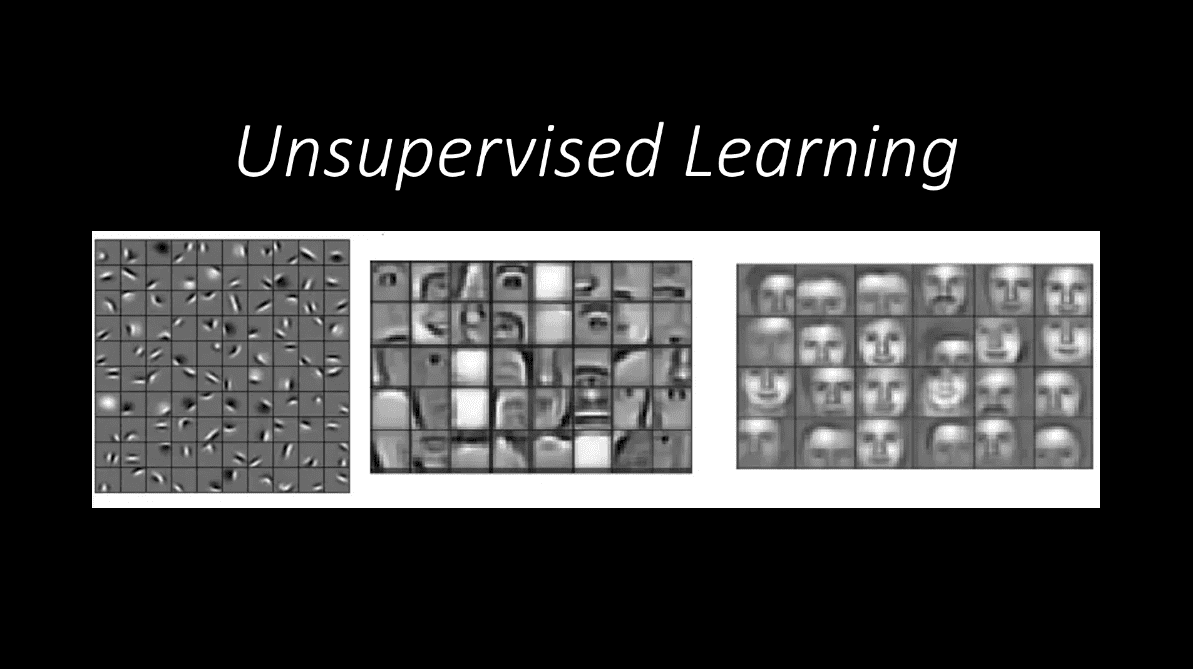

- Unsupervised Learning: Here, the model is trained on unlabeled data and is tasked with discovering hidden patterns or structures within the data. Clustering and dimensionality reduction are common applications. For instance, unsupervised learning can be used to group similar customers for targeted marketing or reduce the complexity of large datasets for easier analysis.

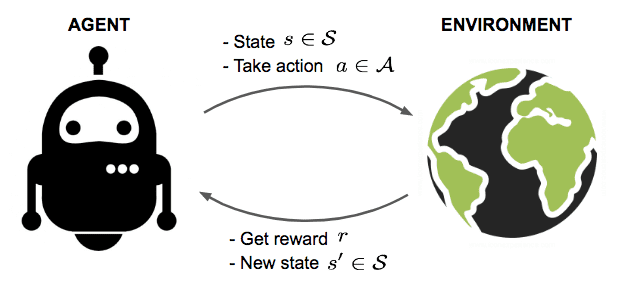

- Reinforcement Learning: This branch of ML focuses on training agents that learn to make decisions by interacting with an environment and receiving feedback in the form of rewards or penalties. In RL, agents balance exploration (trying new actions to learn more about the environment) and exploitation (choosing actions based on current knowledge to maximize rewards). It’s commonly used in robotics, gaming (e.g., training game-playing bots, AlphaGo), and autonomous systems (e.g., self-driving cars).
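The three types of learning above can be sketched side by side in a few lines of Python. This is a toy illustration under stated assumptions, not a reference implementation: the function names, the one-dimensional data, and the two-armed bandit with reward probabilities 0.2 and 0.8 are all invented for the example, and the specific techniques (nearest neighbor, 2-means clustering, epsilon-greedy) are simply common, simple representatives of each type.

```python
import random


def nearest_neighbor_predict(labeled, x):
    """Supervised: use labeled (feature, label) pairs; copy the closest label."""
    _, label = min(labeled, key=lambda pair: abs(pair[0] - x))
    return label


def two_means(values, iterations=10):
    """Unsupervised: no labels; split 1-D values into two clusters."""
    centers = [min(values), max(values)]
    for _ in range(iterations):
        groups = ([], [])
        for v in values:
            nearest = 0 if abs(v - centers[0]) <= abs(v - centers[1]) else 1
            groups[nearest].append(v)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers


def epsilon_greedy_bandit(reward_probs, steps=2000, epsilon=0.1, seed=0):
    """Reinforcement: learn which action pays best from rewards alone."""
    rng = random.Random(seed)
    counts = [0] * len(reward_probs)
    estimates = [0.0] * len(reward_probs)
    for _ in range(steps):
        if rng.random() < epsilon:
            arm = rng.randrange(len(reward_probs))  # explore: try a random action
        else:
            arm = max(range(len(reward_probs)), key=lambda i: estimates[i])  # exploit
        reward = 1.0 if rng.random() < reward_probs[arm] else 0.0
        counts[arm] += 1
        estimates[arm] += (reward - estimates[arm]) / counts[arm]  # running mean
    return estimates, counts


labeled = [(1.0, "small"), (2.0, "small"), (8.0, "large"), (9.0, "large")]
print(nearest_neighbor_predict(labeled, 7.5))   # large
print(two_means([1.0, 2.0, 8.0, 9.0]))          # [1.5, 8.5]
estimates, counts = epsilon_greedy_bandit([0.2, 0.8])
print(counts)  # how often each action was chosen
```

The supervised function needs labels, the clustering function finds the two groups without them, and the bandit agent is never told which action is better: it has to discover that by trying both and tracking the rewards.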

4. Deep Learning and Neural Networks.

Deep Learning is a subfield of machine learning that has gained remarkable attention in recent years. It focuses on training artificial neural networks with multiple layers (deep neural networks) to perform complex tasks.

5. Neural Networks.

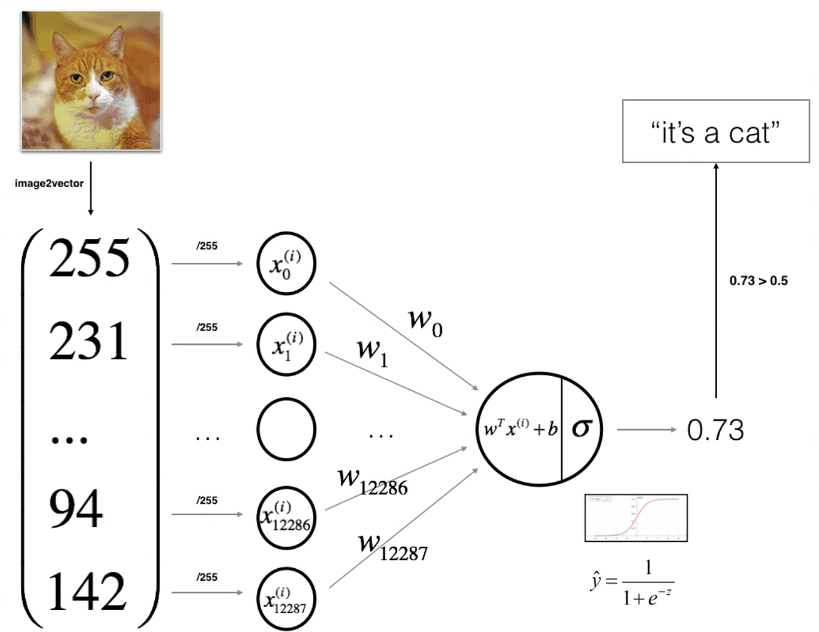

At the core of deep learning are artificial neural networks, which are inspired by the structure and functioning of biological neurons.

These networks consist of layers of interconnected nodes or neurons, each with associated weights.

Deep learning models learn from data through a process called training. During training, the model adjusts its weights to minimize the difference between its predictions and the actual data, iteratively improving its performance.
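As a rough illustration of this training loop, the sketch below trains a single artificial neuron with gradient descent. The toy task (learning the logical OR of two inputs), the sigmoid activation, the log-loss gradient, and the hyperparameter values are all assumptions chosen to keep the example small; real deep learning models stack many such neurons into layers and rely on specialized libraries.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, inputs):
    """Forward pass of one neuron: weighted sum of the inputs, then sigmoid."""
    return sigmoid(sum(w * x for w, x in zip(weights, inputs)) + bias)


def train(samples, epochs=1000, learning_rate=0.5):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for inputs, target in samples:
            # Difference between the prediction and the actual value.
            error = predict(weights, bias, inputs) - target
            # For a sigmoid output with log loss, the gradient for each weight
            # is error * input, and the gradient for the bias is error.
            weights = [w - learning_rate * error * x for w, x in zip(weights, inputs)]
            bias -= learning_rate * error
    return weights, bias


# Toy task (an assumption for illustration): the logical OR of two inputs.
or_samples = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
weights, bias = train(or_samples)
for inputs, target in or_samples:
    print(inputs, target, round(predict(weights, bias, inputs), 3))
```

Each pass over the data nudges the weights in the direction that shrinks the error, which is the iterative improvement described above.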

Deep learning has been instrumental in various applications, including image and speech recognition, natural language processing, autonomous vehicles, and more. Convolutional Neural Networks (CNNs) excel in image-related tasks, while Recurrent Neural Networks (RNNs) are used for sequential data, and Transformers are highly effective in natural language understanding.

6. Generative Adversarial Networks (GANs):

GANs consist of two neural networks, the generator and the discriminator, that are trained adversarially. The generator aims to create data that is indistinguishable from real data, while the discriminator tries to tell real from fake.

GANs have been used for image generation, style transfer, data augmentation, and even deepfake creation.

7. Transformers:

Transformers represent a breakthrough in natural language processing (NLP) and have significantly impacted AI models’ capabilities in understanding and generating human language.

The core innovation in Transformers is the attention mechanism, which allows the model to weigh the importance of different parts of input data when making predictions. This mechanism enables capturing long-range dependencies in data, making it especially effective in NLP.
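The weighting idea can be sketched with scaled dot-product attention, the form of attention commonly associated with Transformers. The version below is a simplification under stated assumptions: it uses plain Python lists, the three two-dimensional "token" vectors are made up, and the same vectors serve as queries, keys, and values, whereas real models first apply learned projections and run many attention heads in parallel.

```python
import math


def softmax(scores):
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors."""
    dim = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dimension).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)  # importance of each input position
        # Output is the weighted average of the value vectors.
        output = [sum(w * v[j] for w, v in zip(weights, values))
                  for j in range(len(values[0]))]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical token vectors; self-attention uses them as queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = attention(tokens, tokens, tokens)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and shows how much one position attends to every other position. Because every position is compared with every other directly, distance in the sequence does not limit which parts can influence each other.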

BERT and GPT: Bidirectional Encoder Representations from Transformers (BERT) and the Generative Pre-trained Transformer (GPT) are two prominent examples of transformer-based models. BERT excels in understanding context in language, while GPT is adept at generating human-like text.

Transfer Learning: Transformers facilitate transfer learning, where models pretrained on vast datasets can be fine-tuned for specific tasks with relatively small datasets. This has greatly improved the efficiency of training AI models.
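A heavily simplified sketch of the idea follows. Everything in it is hypothetical: `pretrained_features` is a stand-in for a large pretrained model, the five-example dataset is made up, and only a small linear layer on top is trained while the "pretrained" part stays frozen. Strictly speaking this is the feature-extraction variant of transfer learning; full fine-tuning would also update the pretrained weights.

```python
def pretrained_features(x):
    """Stand-in for a frozen pretrained model (hypothetical). A real one would
    be a large network; nothing in this function is updated during training."""
    return [x, x * x]


def train_head(examples, epochs=500, learning_rate=0.05):
    """Train only a small linear layer on top of the frozen features."""
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for x, target in examples:
            features = pretrained_features(x)
            error = sum(w * f for w, f in zip(weights, features)) + bias - target
            weights = [w - learning_rate * error * f for w, f in zip(weights, features)]
            bias -= learning_rate * error
    return weights, bias


def predict(weights, bias, x):
    features = pretrained_features(x)
    return sum(w * f for w, f in zip(weights, features)) + bias


# A small task-specific dataset (made up): targets follow y = x^2.
small_dataset = [(-1.0, 1.0), (-0.5, 0.25), (0.0, 0.0), (0.5, 0.25), (1.0, 1.0)]
weights, bias = train_head(small_dataset)
print(round(predict(weights, bias, 0.8), 3))
```

Five examples are enough here only because the frozen features already capture what the task needs, which is the efficiency gain the paragraph above describes.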